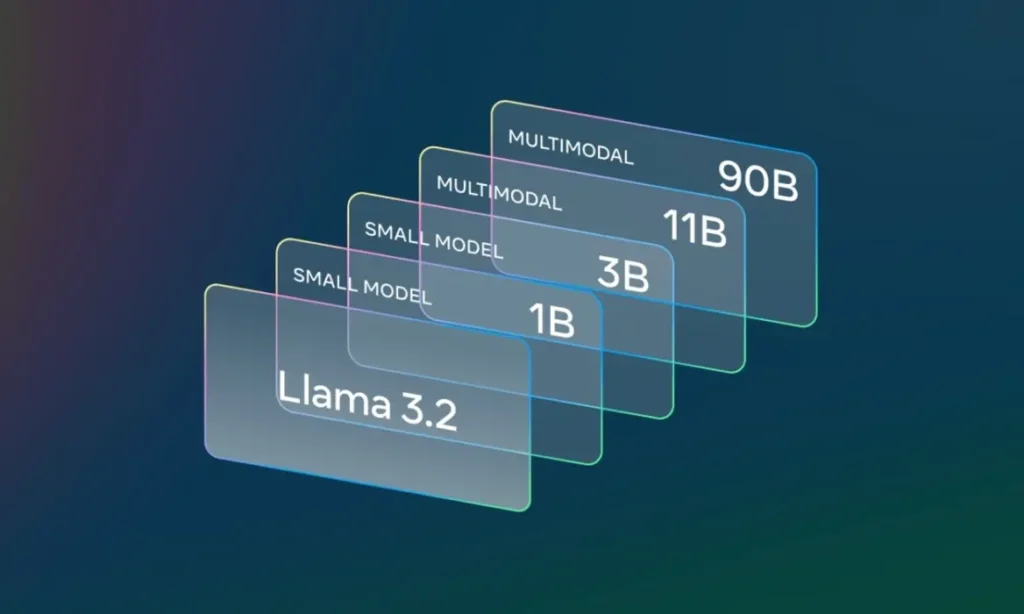

Meta has released the new Llama 3.2 models, including the first multimodal vision models in the Llama family, with 11 billion (11B) and 90 billion (90B) parameters. These models process both images and text and are now available on IBM watsonx.

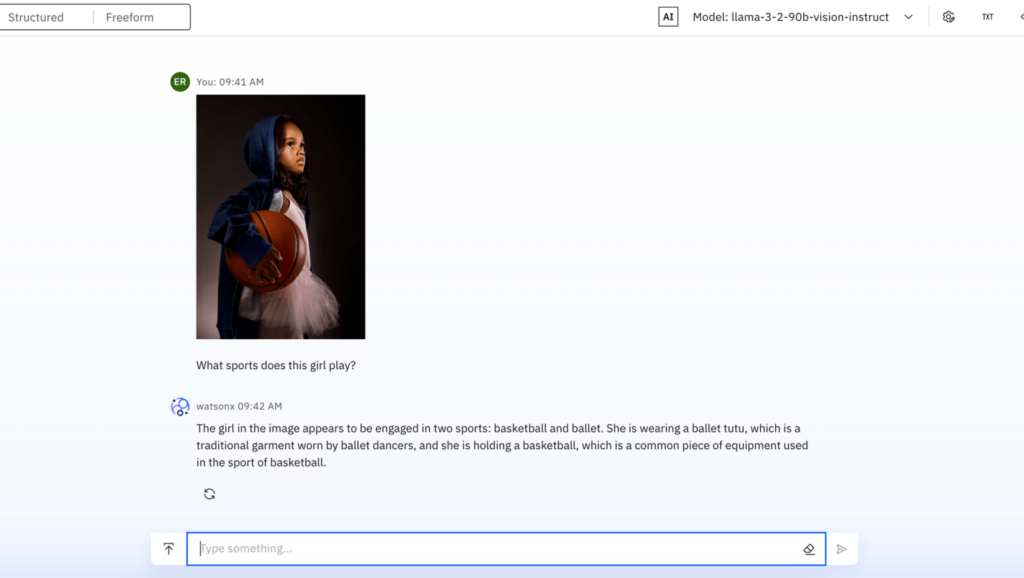

Multimodal Llama 3.2 vision models

The vision models in the Llama 3.2 series take multimodal input, i.e. both images and text, and answer questions about that content. Whether the task is understanding documents, interpreting diagrams or labeling images, Llama 3.2 Vision is suitable for many different use cases.
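
To make the idea of a combined image-and-text input more concrete, here is a minimal sketch of how such a request could be packaged. The message layout (a `content` list with a `text` part and an `image_url` part carrying a base64 data URI) follows a widely used chat-completions convention and is an assumption here, not something the announcement specifies. The function name `build_vision_message` is made up for illustration.

```python
import base64


def build_vision_message(question: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Build one user message that carries both a text question and an image.

    Assumption: the target chat endpoint accepts images as base64 data URIs
    inside an "image_url" content part. Check your provider's documentation.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
        ],
    }


# Placeholder bytes stand in for a real image read with open(path, "rb").read().
message = build_vision_message("What does this diagram show?", b"placeholder image bytes")
```

The resulting dictionary would then be passed, inside a list of messages, to whichever chat interface hosts the model.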

High-resolution image processing

The vision models analyze high-resolution images of up to 1120×1120 pixels. This enables classification, object recognition, optical character recognition (OCR), question-and-answer scenarios and data extraction, which is valuable for companies that want to process visual content automatically.
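
If source images are larger than the stated limit, one option is to scale them down before sending them. The sketch below only does the arithmetic for that step. It assumes that downscaling on the client side is wanted at all (the text does not say how oversized images are handled), and `fit_within_limit` is a hypothetical helper name.

```python
MAX_SIDE = 1120  # upper bound per side, taken from the paragraph above


def fit_within_limit(width: int, height: int, max_side: int = MAX_SIDE) -> tuple[int, int]:
    """Return dimensions scaled down (never up) to fit max_side x max_side.

    The aspect ratio is preserved; images already within the limit are unchanged.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    scale = min(1.0, max_side / width, max_side / height)
    return max(1, round(width * scale)), max(1, round(height * scale))


print(fit_within_limit(2240, 1120))  # a 2:1 image is halved on both sides
print(fit_within_limit(800, 600))    # already small enough, left as-is
```

The returned size can be handed to any image library's resize function.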

Efficient multimodal approach

Llama 3.2 Vision uses “Image Reasoning Adapter Weights” to add image processing without changing the language parameters. This has two benefits. First, the language capabilities are fully preserved, while only 0.04% of the parameters need to be changed. Second, the additional computing power for image reasoning is only used when it is actually needed, i.e. when an input contains an image, resulting in efficient use of resources.
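
The principle can be illustrated with a deliberately tiny toy model. This is not Meta's architecture: the numbers are invented, the "weights" are plain Python sequences, and the names `LANGUAGE_WEIGHTS`, `adapter_weights` and `forward` are hypothetical. It only shows the two properties described above: the language path stays untouched, and the adapter costs compute only when an image is present.

```python
from typing import Optional, Sequence

# Toy values only; they stand in for weight tensors and are NOT real model weights.
LANGUAGE_WEIGHTS = (0.5, -1.0, 2.0)  # frozen: a tuple cannot be modified in place
adapter_weights = [0.1, 0.2, 0.3]    # separate image-adapter weights that may change


def forward(text_features: Sequence[float],
            image_features: Optional[Sequence[float]] = None) -> float:
    """Text-only input uses the language path alone; the adapter runs only with an image."""
    output = sum(w * x for w, x in zip(LANGUAGE_WEIGHTS, text_features))
    if image_features is not None:
        # The extra work happens only on this branch.
        output += sum(w * x for w, x in zip(adapter_weights, image_features))
    return output


text_only = forward([1.0, 1.0, 1.0])
with_image = forward([1.0, 1.0, 1.0], [1.0, 1.0, 1.0])
```

Changing `adapter_weights` alters `with_image` but leaves `text_only` exactly as it was, which is the sense in which the language capabilities are preserved.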

Flexible and lightweight Llama 3.2 variants

In addition to the vision models, there are also two compact, text-only versions of Llama 3.2 with 1B and 3B parameters. These models run on almost any hardware, even on smartphones. They offer low latency on simple hardware and, when run locally, increase privacy because no data has to be processed externally. This makes them particularly beneficial in privacy-critical environments.

Agentic AI and Llama Guard

The new Llama 3.2 models are suitable for applications such as retrieval-augmented generation (RAG), multilingual summarization and agentic AI. Agentic AI refers to systems that can make decisions and perform tasks independently, which makes them well suited to complex automation. Another highlight is “Llama Guard”, a multimodal safety model that screens content for harmful or inappropriate information and thereby helps keep generated output safe.
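
How a safety model typically sits around a generator can be sketched in a few lines. Everything here is a stand-in: `generate` and `is_safe` are placeholder callables, not the Llama or Llama Guard interfaces, and the keyword check is only there so the example runs. The pattern itself is the point: screen the prompt, generate, then screen the reply.

```python
from typing import Callable

REFUSAL = "Sorry, I can't help with that request."


def guarded_generate(prompt: str,
                     generate: Callable[[str], str],
                     is_safe: Callable[[str], bool]) -> str:
    """Check the prompt, generate a reply, then check the reply before returning it."""
    if not is_safe(prompt):
        return REFUSAL
    reply = generate(prompt)
    return reply if is_safe(reply) else REFUSAL


# Stand-ins for illustration only: a keyword check and an echoing "model".
def keyword_check(text: str) -> bool:
    return "forbidden" not in text.lower()


def echo_model(prompt: str) -> str:
    return f"Answer to: {prompt}"


print(guarded_generate("Summarize this report", echo_model, keyword_check))
```

In a real deployment, the two callables would wrap calls to the hosted generator and to the safety model respectively.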

Conclusion: Open source AI with flexibility

With Llama 3.2, Meta shows that open and versatile AI models are becoming increasingly important. They offer high performance, flexibility and scalability without neglecting security and data protection. In combination with IBM watsonx, these models are a big step towards the wider availability of powerful AI.

Want to know how IBM watsonx can help your organization unlock the full potential of your data? Contact us for a free consultation.