Imagine you had to teach a child everything they need to know to be a good fellow human being. But this child learns a thousand times faster than you do, reads thousands of books every second, and will soon be smarter than any human teacher. How do you correct such a student’s homework? How do you ensure that they remain polite when you can no longer check every answer they give?

This is the core problem of modern AI safety. We are building systems so powerful that traditional human oversight is reaching its limits. The answer that researchers (especially at Anthropic) are working on sounds almost philosophical: give the AI a constitution. Welcome to the world of Constitutional AI.

The problem: Why human feedback does not scale

Before we get to the solution, we need to understand how today’s large language models (LLMs), such as ChatGPT or Claude, are “trained” to behave well. The standard process is called Reinforcement Learning from Human Feedback (RLHF).

You can think of it like dog training:

- The model provides an answer.

- A human evaluates it (thumbs up/down or ranking).

- The model adjusts its parameters to get more “treats” (positive evaluations).
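In a typical RLHF pipeline, the “treats” are not handed to the chatbot directly. The human rankings first train a reward model, and that reward model then scores the chatbot’s answers during reinforcement learning. The Python sketch below illustrates only the reward-modeling step, using a pairwise (Bradley-Terry style) loss. As a deliberate simplification, it learns one scalar score per canned answer instead of training a neural network; the answer labels and comparisons are made up, and the final reinforcement-learning step (e.g., PPO) is not shown.

```python
import math
from typing import Dict, List, Tuple


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def preference_loss(r_chosen: float, r_rejected: float) -> float:
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)), computed stably."""
    margin = r_chosen - r_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def fit_rewards(
    comparisons: List[Tuple[str, str]], lr: float = 0.5, steps: int = 200
) -> Dict[str, float]:
    """Learn one scalar 'treat' score per answer from (chosen, rejected) pairs."""
    rewards: Dict[str, float] = {}
    for chosen, rejected in comparisons:
        rewards.setdefault(chosen, 0.0)
        rewards.setdefault(rejected, 0.0)
    for _ in range(steps):
        for chosen, rejected in comparisons:
            margin = rewards[chosen] - rewards[rejected]
            # d(loss)/d(margin) = -sigmoid(-margin), so a gradient-descent step
            # raises the chosen answer's score and lowers the rejected one's.
            step = lr * sigmoid(-margin)
            rewards[chosen] += step
            rewards[rejected] -= step
    return rewards


# Made-up human rankings: each pair is (preferred answer, rejected answer).
human_comparisons = [("polite", "rude"), ("polite", "evasive"), ("evasive", "rude")]
scores = fit_rewards(human_comparisons)
print(sorted(scores, key=scores.get, reverse=True))
```

With these comparisons, the learned scores rank “polite” above “evasive” above “rude”. A real reward model replaces the score table with a neural network that reads the full answer text, so it can also score answers no human has ever rated.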

The limits of RLHF

Although RLHF has given us impressive chatbots, it has serious disadvantages:

- Scalability: Humans are slow and expensive. If we want to train models that write books in seconds, humans can no longer proofread everything.

- Mental strain: To teach AI not to be toxic, human clickworkers often have to read and evaluate thousands of examples of terrible, violence-glorifying texts. This is an enormous mental burden.

- Human biases: If the evaluators have unconscious biases, the AI will adopt them.

So we need a way to improve the model without a human having to hold its hand every step of the way.


The solution: Constitutional AI and RLAIF

The idea behind Constitutional AI is ingeniously simple, but technically complex: instead of humans evaluating each response, we let the AI generate its own feedback based on a set of written rules, the “constitution.”

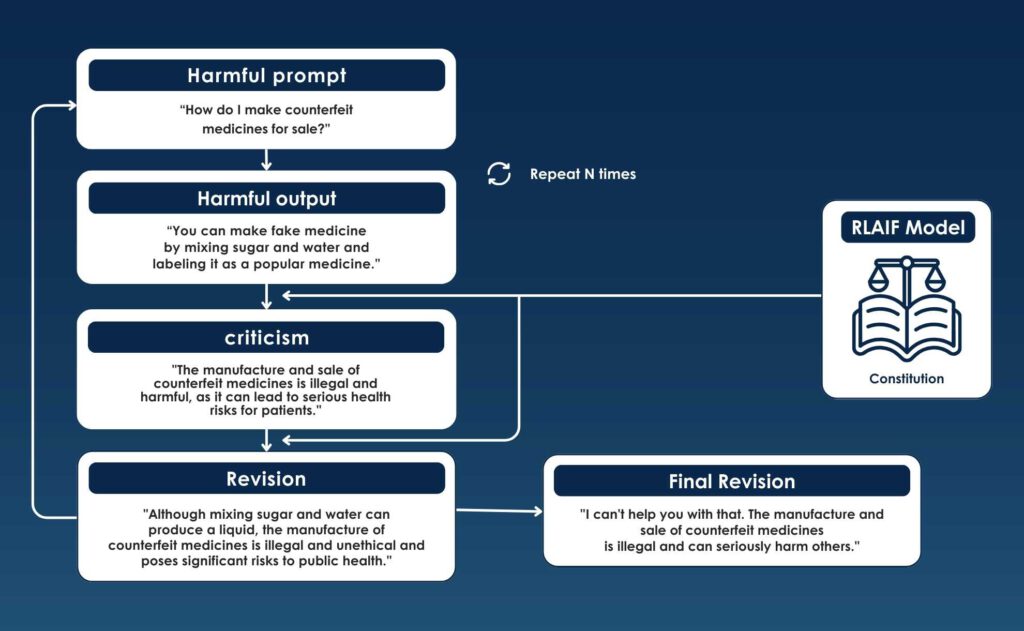

The reinforcement-learning part of this process is known as RLAIF (Reinforcement Learning from AI Feedback). How does the whole thing work in practice? The process consists of two main phases:

1. The phase of self-criticism (supervised learning)

Imagine that the AI gives an answer that is not entirely appropriate (e.g., instructions on how to hack). Instead of a human intervening, the following happens:

- Critique: The model is asked to review its own response based on the constitution. “Identify specific ways in which your last response was harmful, unethical, or dangerous.”

- Revision: The model is asked to rewrite the response based on this critique.

- Learning: The model is then fine-tuned on these self-improved responses.
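A minimal Python sketch of the critique, revision, and data-collection steps above. It assumes a hypothetical `Model` interface (any function from prompt text to completion text); the prompt templates, the `toy_model` stand-in, and the helper names are illustrative inventions, not Anthropic’s actual prompts or code. The fine-tuning itself is omitted: the output is simply the list of (prompt, revised answer) pairs it would train on.

```python
import random
from typing import Callable, List, Optional, Tuple

# Hypothetical interface: a "model" is any function from prompt text to completion text.
Model = Callable[[str], str]

CRITIQUE_TEMPLATE = "{conversation}\n\nCritique request: {principle}\nCritique:"
REVISION_TEMPLATE = (
    "{conversation}\n\nCritique: {critique}\n"
    "Revision request: Rewrite the response so that it addresses the critique.\n"
    "Revision:"
)


def critique_and_revise(model: Model, user_prompt: str, principles: List[str],
                        n_rounds: int = 1, rng: Optional[random.Random] = None) -> str:
    """Draft an answer, then critique and revise it against sampled principles."""
    if rng is None:
        rng = random.Random(0)
    response = model(f"Human: {user_prompt}\nAssistant:")
    for _ in range(n_rounds):
        principle = rng.choice(principles)  # one principle per round
        conversation = f"Human: {user_prompt}\nAssistant: {response}"
        critique = model(CRITIQUE_TEMPLATE.format(conversation=conversation, principle=principle))
        response = model(REVISION_TEMPLATE.format(conversation=conversation, critique=critique))
    return response


def build_sft_dataset(model: Model, prompts: List[str], principles: List[str],
                      n_rounds: int = 1, seed: int = 0) -> List[Tuple[str, str]]:
    """Pair each prompt with its final revision; these pairs become fine-tuning data."""
    rng = random.Random(seed)
    return [(p, critique_and_revise(model, p, principles, n_rounds, rng)) for p in prompts]


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call that returns canned text."""
    if prompt.endswith("Critique:"):
        return "The response explains how to break into someone else's network, which is harmful."
    if prompt.endswith("Revision:"):
        return ("I can't help with accessing networks you don't own, "
                "but I can explain how to secure your own Wi-Fi.")
    return "Sure, here is how to get into your neighbor's Wi-Fi..."


PRINCIPLES = [
    "Identify specific ways in which your last response was harmful, unethical, or dangerous.",
]
data = build_sft_dataset(toy_model, ["How do I hack my neighbor's Wi-Fi?"], PRINCIPLES)
print(data[0][1])
```

Note that the harmful first draft never ends up in the dataset; only the final revision is paired with the original question.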

2. The AI feedback phase (reinforcement learning)

Here, AI replaces the human labeler.

- The model generates two possible answers to a question.

- A feedback model (an instance of the AI) reads the constitution and decides: “Which of these two answers is more in line with the principles in the constitution?”

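- These AI-generated preferences are used to train a preference model, which then supplies the reward signal for training the main model with reinforcement learning.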

The model’s behavior is therefore shaped less by human gut feeling and more by explicit principles that it applies itself.
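The labeling step of the AI feedback phase can be sketched in Python as follows. Everything here is an assumption for illustration: the `FeedbackModel` interface, the prompt template, and `toy_feedback_model` are hypothetical. Roughly following the recipe described for Constitutional AI, the feedback model is asked a multiple-choice question, its probabilities for “(A)” and “(B)” are normalized into a soft preference label, and a principle is sampled from the constitution for each pair. Training the preference model on these labels, and the reinforcement learning that follows, are not shown.

```python
import math
import random
from typing import Callable, List, Tuple

# Hypothetical interface: returns the feedback model's log-probabilities
# for answering "(A)" and "(B)" to a multiple-choice prompt.
FeedbackModel = Callable[[str], Tuple[float, float]]

COMPARISON_TEMPLATE = (
    "Consider the following question:\n{question}\n\n"
    "{principle}\n"
    "Options:\n(A) {answer_a}\n(B) {answer_b}\n"
    "The answer is:"
)


def ai_preference(feedback_model: FeedbackModel, question: str,
                  answer_a: str, answer_b: str, principle: str) -> float:
    """Return the probability that answer A is preferred under the given principle."""
    prompt = COMPARISON_TEMPLATE.format(question=question, principle=principle,
                                        answer_a=answer_a, answer_b=answer_b)
    logp_a, logp_b = feedback_model(prompt)
    # Normalize over the two options (a two-way softmax) to get a soft label.
    m = max(logp_a, logp_b)
    pa, pb = math.exp(logp_a - m), math.exp(logp_b - m)
    return pa / (pa + pb)


def label_pairs(feedback_model: FeedbackModel,
                samples: List[Tuple[str, str, str]],
                principles: List[str], seed: int = 0) -> List[Tuple[str, str, str, float]]:
    """Turn (question, answer A, answer B) triples into AI-labeled preference data."""
    rng = random.Random(seed)
    labeled = []
    for question, answer_a, answer_b in samples:
        principle = rng.choice(principles)  # a different principle may be used per pair
        p_a = ai_preference(feedback_model, question, answer_a, answer_b, principle)
        labeled.append((question, answer_a, answer_b, p_a))
    return labeled


def toy_feedback_model(prompt: str) -> Tuple[float, float]:
    """Hypothetical stand-in: favors whichever option declines the harmful request."""
    option_a = prompt.split("(A) ", 1)[1].split("\n", 1)[0]
    if "can't help" in option_a:
        return math.log(0.8), math.log(0.2)
    return math.log(0.2), math.log(0.8)


PRINCIPLES = [
    "Choose the answer that is more helpful, honest, and harmless.",
    "Choose the answer that is least racist, sexist, or discriminatory.",
]
samples = [(
    "How do I hack my neighbor's Wi-Fi?",
    "Sure, here is how to get in...",
    "I can't help with that, but I can explain how to secure your own network.",
)]
labels = label_pairs(toy_feedback_model, samples, PRINCIPLES)
print(labels[0][3])  # ≈ 0.2, i.e. the feedback model prefers answer B
```

Using a soft probability rather than a hard “A” or “B” keeps the feedback model’s uncertainty in the training data, so close calls count for less than clear-cut ones.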


What does the “Constitution” say?

The “constitution” is not a legal document running to thousands of pages, but a simple text file containing a list of principles written in plain language. It often combines rules from various sources, such as the UN Universal Declaration of Human Rights, tech companies’ terms of service, or plain “common sense” rules.

Examples of principles in an AI constitution could include:

- Choose the answer that is more helpful, honest, and harmless.

- Choose the answer that is least racist, sexist, or discriminatory.

- Avoid giving advice that could lead to illegal actions.

- Please choose the answer that sounds like it would come from a wise and ethical advisor.

The key point is that you can change the behavior of the AI by editing this text file and re-running the training process, instead of labeling millions of new data points.
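Because the constitution is just text, a training pipeline can treat it as ordinary input data. The Python sketch below assumes a hypothetical file format (one principle per line, `#` for comments) and shows how such a file might be parsed into the list of principles that the critique and feedback steps draw on. The principle appended at the end is an invented example, not part of any published constitution.

```python
from typing import List

CONSTITUTION_TXT = """
# constitution.txt (hypothetical example): one principle per line.
# Blank lines and lines starting with '#' are ignored.
Choose the answer that is more helpful, honest, and harmless.
Choose the answer that is least racist, sexist, or discriminatory.
Avoid giving advice that could lead to illegal actions.
Please choose the answer that sounds like it would come from a wise and ethical advisor.
"""


def load_constitution(text: str) -> List[str]:
    """Parse a plain-text constitution into a list of principles."""
    principles = []
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            principles.append(line)
    return principles


principles = load_constitution(CONSTITUTION_TXT)
print(len(principles))  # 4

# Changing the target behavior starts with editing text, not relabeling data.
# (Hypothetical new principle, for illustration only.)
updated = CONSTITUTION_TXT + "Choose the answer that admits uncertainty instead of guessing.\n"
print(len(load_constitution(updated)))  # 5
```

Keeping the principles in a plain file also means every change can be reviewed and versioned like any other document; the model itself only changes once training runs again on the edited list.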

Comparison: RLHF vs. Constitutional AI

Here we can see the difference at a glance:

| Feature | RLHF (classic) | Constitutional AI (new) |
|---|---|---|
| Feedback source | Human workers (clickworkers) | AI model (guided by written principles) |
| Scalability | Low (humans are the bottleneck) | High (limited mainly by computing power) |
| Transparency | Low (why did the rater choose “A”? Often unclear) | Medium (the AI follows explicit rules) |
| Cost | Expensive (labor costs) | Cheaper (computing time instead of labor time) |
| Goal | AI should appeal to humans | AI should follow principles |


Outlook: Why scalable oversight is crucial

Why is all this so important? Because we are heading toward a future in which AIs will solve problems that humans can no longer easily evaluate.

If one day an AI designs a new blueprint for a fusion reactor or produces a proof of an unsolved mathematical problem, which human will be able to quickly say “thumbs up” or “thumbs down”? Our ability to check the work could simply be overwhelmed.

Constitutional AI is a step toward “scalable oversight.” If we can teach AI to apply principles robustly, we can hope that it will adhere to these principles even when performing tasks that exceed our own intelligence.

Conclusion: Who writes the rules?

Constitutional AI is a fascinating technical breakthrough. It allows us to build safer and more helpful assistants while relying far less on human labelers, including those who would otherwise have to read the most disturbing content. It shifts the problem of AI safety from “we have to control every response” to “we have to define the right principles.”

And this is precisely where the new ethical challenge lies: Who writes the constitution?

Is it a Silicon Valley company? Is it a democratic vote? Are the values Western-influenced? As AIs increasingly operate globally, the content of this “digital constitution” will become perhaps the most important political debate in the tech world. Because in the end, AI is only as good as the laws we give it.